Why AI Fails (and How to Avoid the Most Common Pitfalls with an AI Strategy)

- William Beresford

- Aug 8, 2025

Artificial intelligence promises faster decisions, sharper customer insights, and a step-change in productivity. Yet despite the hype, many AI initiatives never deliver the value leaders expect. In fact, studies suggest that the majority of AI projects stall before reaching production - and even those that launch often fail to scale.

At Beyond, we’ve seen the same patterns play out across industries: ambitious plans, early excitement, and then roadblocks that derail momentum. The good news? Most AI failures are avoidable with the right foundations, governance, and change management.

In this article, we’ll unpack the most common reasons AI projects fail - and how to sidestep them.

1. No Clear Link between Business and AI Strategy

The pitfall: Too often, AI projects are driven by curiosity or competitive pressure rather than strategic alignment. A business unit might launch a chatbot because a competitor has one, without asking: Will this help us win in our market?

Why it matters: When AI doesn’t connect to clear business objectives, it’s hard to secure ongoing investment or measure impact.

How to avoid it:

Start every AI initiative with a strategic business question.

Define success metrics before building anything.

Secure C-suite sponsorship to ensure alignment with broader goals.

2. Chasing Technology, Not Value

The pitfall: Organisations get caught up in the novelty of the latest model or tool (generative AI, computer vision, voice agents) without considering the use case’s actual commercial value.

Why it matters: Technology-first projects risk becoming expensive science experiments. They may work technically but fail to deliver ROI.

How to avoid it:

Prioritise use cases with measurable business outcomes.

Use a Proof of Value → Pilot → Scale approach to minimise risk.

Keep the technology stack flexible so you can adapt as tools evolve.

3. Poor Data Readiness

The pitfall: AI models are only as good as the data they’re trained on. If data is siloed, incomplete, or poorly governed, the AI’s outputs will be unreliable.

Why it matters: Low-quality or inaccessible data slows development, creates bias, and can lead to poor decisions that erode trust in AI.

How to avoid it:

Assess data quality, observability, and governance early in the project.

Establish metadata tracking and lineage for transparency.

Invest in data engineering and integration to make AI-ready data available at scale.
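
As an illustration of what an early data quality assessment might look like, the sketch below measures how complete each required field is across a set of records and lists the fields that fall below a threshold. The function names, the record layout, and the 95% threshold are hypothetical choices for this example, not a prescribed standard. A real assessment would also look at accuracy, timeliness, and lineage.

```python
from typing import Any


def completeness_report(
    records: list[dict[str, Any]], required_fields: list[str]
) -> dict[str, float]:
    """Return, for each required field, the share of records with a usable value.

    A value counts as missing if the key is absent, None, or an empty string.
    """
    total = len(records)
    report: dict[str, float] = {}
    for field in required_fields:
        filled = sum(1 for record in records if record.get(field) not in (None, ""))
        # Avoid dividing by zero when there are no records at all.
        report[field] = filled / total if total else 0.0
    return report


def fields_below_threshold(
    report: dict[str, float], threshold: float = 0.95
) -> list[str]:
    """List the fields whose completeness is under the threshold (assumed 95%)."""
    return sorted(field for field, share in report.items() if share < threshold)
```

Running `completeness_report` on a sample extract before any modelling starts gives the team a simple, shareable view of which fields need attention first.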

4. Underestimating Governance and Risk

The pitfall: Organisations rush to deploy AI without considering regulatory compliance, ethical principles, or explainability requirements.

Why it matters: A lack of governance can lead to reputational damage, legal action, and loss of customer trust.

How to avoid it:

Define AI governance frameworks before deployment.

Address bias, transparency, and accountability from day one.

Engage risk, compliance, and legal teams early.

5. Fragmented Delivery Model

The pitfall: AI is treated as a side project in one department, without integration into enterprise processes or alignment with IT, analytics, and operations.

Why it matters: Without an integrated delivery model, AI solutions remain isolated and fail to scale.

How to avoid it:

Establish AI communities of practice and cross-functional teams.

Embed AI into the operating model, with clear ownership and decision rights.

Use common platforms, tools, and standards for development.

6. Failing to Bring People Along

The pitfall: Leaders underestimate the cultural change required for AI adoption. Employees see AI as a threat, or simply don’t understand how to use it.

Why it matters: Without adoption, even the best AI solutions deliver zero value.

How to avoid it:

Invest in AI literacy programmes to build understanding and trust.

Create champions in each business area.

Communicate early and often about the why, what, and how of AI initiatives.

7. No Plan for Scaling Beyond the Pilot

The pitfall: Organisations run successful pilots but fail to plan for the operational realities of scaling: infrastructure, monitoring, retraining models, and integrating outputs into workflows.

Why it matters: Without a scale plan, AI remains trapped in pilot mode, never delivering enterprise-wide impact.

How to avoid it:

Design for scale from the start, even in Proof of Value stages.

Implement ModelOps and monitoring processes to keep AI outputs accurate over time.

Budget for ongoing operational costs, not just development.
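
To make the monitoring point concrete, here is a minimal sketch of one check a ModelOps process might run: compare the model's accuracy on recent, labelled outcomes with the accuracy recorded at launch, and flag the model for review when the drop exceeds a tolerance. The function names and the five-point tolerance are assumptions made for this example. Production monitoring would typically track several metrics, plus input data drift.

```python
from typing import Sequence


def accuracy(predictions: Sequence[object], actuals: Sequence[object]) -> float:
    """Share of predictions that match the observed outcomes."""
    if len(predictions) != len(actuals):
        raise ValueError("predictions and actuals must have the same length")
    if not predictions:
        raise ValueError("at least one prediction is required")
    correct = sum(1 for p, a in zip(predictions, actuals) if p == a)
    return correct / len(predictions)


def needs_retraining(
    baseline_accuracy: float, recent_accuracy: float, max_drop: float = 0.05
) -> bool:
    """Flag the model when accuracy has fallen by more than max_drop (assumed 0.05)."""
    return (baseline_accuracy - recent_accuracy) > max_drop
```

A check like this can run on a schedule, with the result feeding an alert or a retraining ticket, so that performance decay is caught before users lose trust in the outputs.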

How Beyond Helps Organisations Avoid AI Failure

We work with organisations across sectors to:

Align AI initiatives to business strategy and measurable value.

Build AI-ready data foundations and governance frameworks.

Create integrated operating models that scale AI across functions.

Support cultural adoption so teams embrace AI as a partner, not a threat.

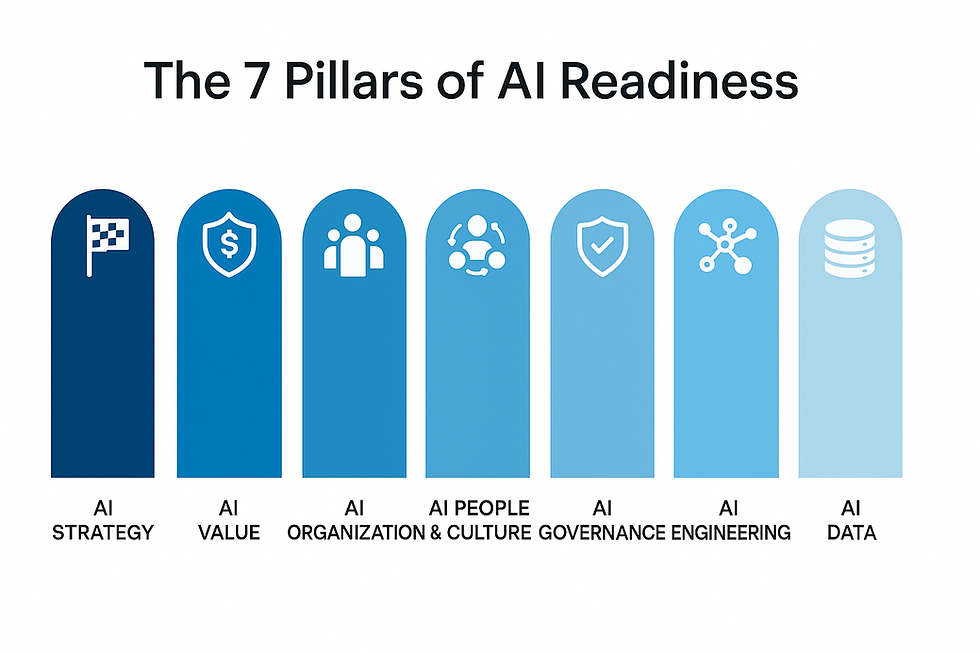

Our approach blends AI strategy, engineering, governance, and change management — ensuring that AI not only launches successfully but continues to deliver value.

The Bottom Line

AI failure isn’t inevitable. By grounding your AI strategy in strategic alignment, data readiness, governance, integrated delivery, and adoption, you can substantially improve your chances of success.

The key takeaway? AI success is rarely about having the most advanced algorithms; it’s about building the right foundations and engaging the right people.

→ If you’re planning or rescuing an AI initiative, talk to us about our AI Readiness Diagnostic and implementation support.